MIT survey to establish moral guidelines for autonomous vehicle control

November 07, 2018

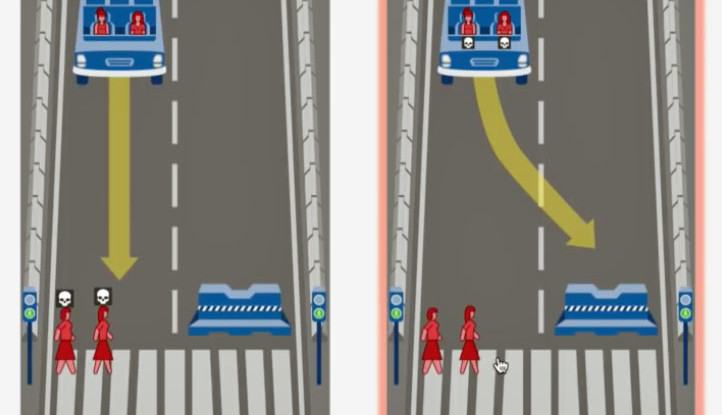

How should an autonomously controlled vehicle react in a situation where lives are at stake? Millions of participants from over 200 countries have taken part in a survey which was first put online by MIT in 2014. Participants were presented with ethical dilemmas that the control system of an autonomous vehicle will need to resolve. In each dilemma an accident is imminent, and the car must choose between two potentially lethal alternatives.

Based on nearly 40 million responses from participants in 233 countries, the survey found that most respondents think autonomous vehicles should:

• Give precedence to saving the life of a human over any other creature.

• Take a course of action that would spare as many lives as possible.

• Spare younger people over older people.

The survey is based on the results of a multilingual online game called the ‘Moral Machine’, in which players are asked to indicate their preferences when faced with certain predicaments. One example: should an autonomous vehicle spare the life of a law-abiding pedestrian rather than that of a careless jaywalker? This one was pretty clear-cut, with most participants wanting to spare the former.

Autonomous vehicles need ethical guidelines. Video: mit.edu

So far, the survey has collected nearly 40 million individual responses from 233 countries. Around 500,000 of the participants also chose to complete a demographic survey covering their age, education, gender and income, as well as their political and religious views. The researchers could not identify any significant trends associated with these demographic characteristics, but a participant’s country of origin played a significant role in their ethical reasoning. For example, responses from participants living in Taiwan, China and South Korea (where the elderly are venerated) put the least emphasis on sparing the young in a life-threatening event, while those from France, Greece, Canada and the UK put the most emphasis on saving them.

The survey results are important: before engineers program autonomous vehicles to make ethical choices, we need a global discussion to ensure that these preferences, and any universally agreed guidelines, are incorporated into the design of the control algorithms. The research, entitled ‘The Moral Machine experiment’, was published in the journal Nature.
