New search engine queries sensor networks

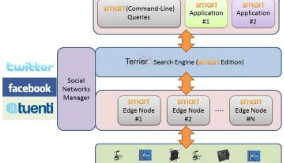

A new search engine that queries sensor networks to give real-time answers to questions about the physical world is being developed by a project dubbed ‘Search engine for MultimediA Environment geneRated content’ (SMART). The project aims to develop and implement an open source system that lets Internet users search and analyse data from any network of sensors.

By matching search queries with information from sensors and cross-referencing data from social networks such as Twitter, the search engine will enable users to receive detailed responses to questions such as ‘What part of the city hosts live music events which my friends have been to recently?’ or ‘How busy is the city centre?’ Currently, standard search engines such as Google are not able to answer search queries of this type.

SMART will develop a scalable search and retrieval architecture for multimedia data, along with intelligent techniques for the real-time processing, search and retrieval of physical world multimedia. The SMART framework is intended to scale in both functional and business terms, and to be extensible with new sensors and new multimedia data processing algorithms.

Matching will be based on sensor context and metadata (e.g. location, status and capabilities), as well as the dynamic context of the physical world as perceived by processing algorithms (such as face detectors, person trackers, acoustic event classifiers and components for crowd analysis). SMART will also be able to leverage information from Web 2.0 social networks in order to support social queries of physical world multimedia.
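To make the matching idea concrete, here is a minimal sketch in Python. It is not SMART's code or API: the `Sensor`, `Query` and `match` names, the flat kilometre grid and the observation labels are all assumptions made for illustration. It filters sensors on static metadata (status, capabilities, location) and then ranks them by a dynamic value that a processing algorithm, such as a crowd analysis component, might have produced. The social network side of the matching is left out.

```python
from dataclasses import dataclass, field
from math import hypot


@dataclass
class Sensor:
    """Hypothetical sensor record; the fields mirror the metadata named in the article."""
    sensor_id: str
    location: tuple          # (x, y) in km on an assumed flat city grid
    status: str              # "online" or "offline"
    capabilities: set        # e.g. {"video"} or {"audio"}
    # Dynamic context as scored by processing algorithms (assumed 0..1 values)
    observations: dict = field(default_factory=dict)


@dataclass
class Query:
    """Hypothetical structured form of a question such as 'How busy is the city centre?'"""
    near: tuple              # point of interest, same grid as Sensor.location
    radius_km: float
    capability: str          # sensor type required to answer the question
    observation: str         # dynamic signal to rank by, e.g. "crowd_density"


def match(query, sensors):
    """Return (sensor_id, score) pairs for matching sensors, highest score first."""
    hits = []
    for s in sensors:
        if s.status != "online":
            continue  # static context: sensor must be running
        if query.capability not in s.capabilities:
            continue  # static context: sensor must be of the right kind
        dx = s.location[0] - query.near[0]
        dy = s.location[1] - query.near[1]
        if hypot(dx, dy) > query.radius_km:
            continue  # static context: sensor must be close enough
        if query.observation not in s.observations:
            continue  # dynamic context: the signal must have been observed
        hits.append((s.sensor_id, s.observations[query.observation]))
    return sorted(hits, key=lambda hit: hit[1], reverse=True)


sensors = [
    Sensor("cam-1", (0.0, 0.0), "online", {"video"}, {"crowd_density": 0.8}),
    Sensor("cam-2", (0.5, 0.5), "online", {"video"}, {"crowd_density": 0.3}),
    Sensor("mic-1", (0.2, 0.1), "online", {"audio"}, {"live_music": 0.9}),
    Sensor("cam-3", (5.0, 5.0), "online", {"video"}, {"crowd_density": 0.9}),
    Sensor("cam-4", (0.1, 0.1), "offline", {"video"}, {"crowd_density": 0.7}),
]

busy = match(Query((0.0, 0.0), 1.0, "video", "crowd_density"), sensors)
print(busy)
```

In this toy data only `cam-1` and `cam-2` pass every filter: `mic-1` lacks the video capability, `cam-3` is outside the radius and `cam-4` is offline.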

Image: SMART
