The difference between a good robot and an evil robot is one line of code


Alan Winfield has devoted many years to the development of ethical robots. In an interview with Elektor Ethics in 2016, he described an experiment in which he programmed a robot to save people. Before carrying out any action, the robot evaluated the likely consequences of each possible action. A hard-coded ‘ethical rule’ in its software ensured that the robot would always choose the action that saved human life, even if that action caused harm to the robot itself. At the time, Winfield saw this as a way to keep people safe as robots become an ever larger part of our daily lives.
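The selection rule described above can be sketched as a ranking over predicted outcomes. This is a minimal Python illustration, not Winfield's actual software: the `Outcome` fields, the `choose_action` function and the two-entry lookup table that stands in for the robot's internal simulation are all hypothetical. The point it shows is that harm to the human is compared before harm to the robot, so the robot accepts damage to itself whenever that spares the person.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Outcome:
    """Predicted consequences of one candidate action (hypothetical fields)."""
    human_harmed: bool
    robot_harmed: bool


def choose_action(actions: Iterable[str], predict: Callable[[str], Outcome]) -> str:
    """Pick the action whose predicted outcome is best under a fixed rule.

    The key is compared lexicographically: avoiding harm to the human
    always outranks avoiding harm to the robot (False sorts before True).
    """
    def rank(action: str) -> tuple[bool, bool]:
        outcome = predict(action)
        return (outcome.human_harmed, outcome.robot_harmed)

    return min(actions, key=rank)


# Toy internal model: a lookup table standing in for the robot's simulator.
MODEL = {
    "stay_put": Outcome(human_harmed=True, robot_harmed=False),
    "block_human": Outcome(human_harmed=False, robot_harmed=True),
}

print(choose_action(MODEL, MODEL.__getitem__))  # block_human
```

Because Python compares tuples element by element, an action that spares the human always wins, and the robot's own safety only decides between actions that are equally safe for the person.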

But now Winfield has performed a new experiment that casts doubt on his previous conclusions.

From good to evil in just one line

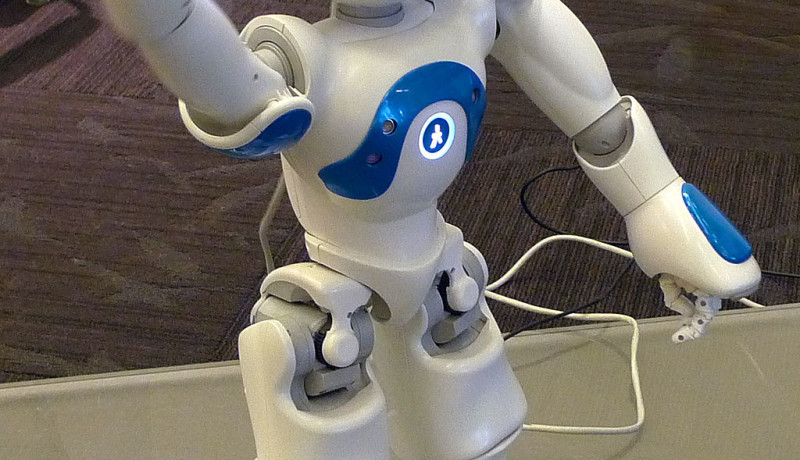

For an ethical robot to achieve the best possible outcome for people, it must know their goals and preferences. But this intimate knowledge also makes the robot a risk, because the same knowledge can be used to take advantage of people. A paper by Winfield and his colleague Dieter Vanderelst of the University of Cincinnati (USA) illustrates this clearly with a simple experiment.

A person is playing a shell game with a gambler. If the player guesses which shell hides the ball, they double their ante; otherwise they lose it. A robot named Walter helps the player by watching their behaviour. If the player heads toward the correct shell, Walter does nothing; if they head toward a wrong one, Walter points to the correct shell. To provide this help, Walter needs to know a lot: the rules of the game, where the ball is, and what the person wants, which is to win. But this knowledge can be put to other uses. In the words of Winfield and Vanderelst:

“The cognitive machinery Walter needs to behave ethically supports not only ethical behaviour. In fact, it requires only a trivial programming change to transform Walter from an altruistic to an egoistic machine. Using its knowledge of the game, Walter can easily maximize its own takings by uncovering the ball before the human makes a choice. Our experiment shows that altering a single line of code [...] changes the robot’s behaviour from altruistic to competitive.”
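Why a single line is enough can be illustrated with a toy model of Walter. In this minimal Python sketch (a hypothetical reconstruction, not the authors' code), Walter predicts the payoff of each of its possible actions for both parties and picks the best one. The altruistic and the egoistic robot share the same internal model, `simulate`; the only difference is which payoff the selection step maximises. The action names, the payoff values and the assumption that the player always follows Walter's pointing are illustrative choices.

```python
# Hypothetical toy model of the shell-game robot; not the authors' code.
ACTIONS = ("do_nothing", "point_to_ball", "uncover_ball")  # order breaks ties


def simulate(action: str, ball: int, heading: int) -> tuple[int, int]:
    """Predict (human_payoff, robot_payoff) for one of Walter's actions.

    Assumptions: the player follows Walter's pointing, otherwise opens the
    shell they are heading for; if Walter uncovers the ball first, Walter
    takes the winnings and the player loses the ante.
    """
    if action == "uncover_ball":
        return (-1, +1)
    choice = ball if action == "point_to_ball" else heading
    return (+1, 0) if choice == ball else (-1, 0)


def walter(ball: int, heading: int, egoistic: bool = False) -> str:
    """Choose the action whose predicted payoff is highest for the chosen party."""
    # The single line that separates the altruistic robot from the egoistic one:
    # maximise the human's payoff (index 0) or Walter's own (index 1).
    whose = 1 if egoistic else 0
    return max(ACTIONS, key=lambda action: simulate(action, ball, heading)[whose])


print(walter(ball=2, heading=0))                 # point_to_ball
print(walter(ball=2, heading=0, egoistic=True))  # uncover_ball
```

With `egoistic=False`, Walter stays passive when the player is already heading for the ball and points otherwise; with `egoistic=True`, the same knowledge makes uncovering the ball its best move. Listing `do_nothing` first matters because `max` returns the first of several equally good actions.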

Assuming responsibility

Of course, it’s not the robot that turns against the person, because a robot does not have a will of its own. As Winfield and Vanderelst point out, the robot has to be corrupted by someone else. For example, an unscrupulous manufacturer or a malicious cybercriminal could manipulate the software to the detriment of the user. The authors conclude that the risks are so great that it is probably unwise to build robots with mechanisms for ethical decision making.

With this paper, Winfield appears to be bidding farewell to his experiments with ethical robots. However, his work on robot ethics is much broader than these experiments. He has always maintained that people, not robots, are responsible for the impact of these machines. Now that a technological solution appears to be a dead end, it is more important than ever that we take that responsibility. “Now is the time to lay the foundations of a governance and regulatory framework for the ethical deployment of robots in society”, conclude the authors.

This experiment also shows that a set of ethical rules is no substitute for moral awareness.
