Quadruped Robots

March 21, 2023

Quadruped robots move on four legs, much as animals like dogs and cats do. They are designed to perform a variety of tasks and have numerous applications, such as search and rescue, military operations, inspection and maintenance, and entertainment.

Uniqueness

Quadruped robots have particular advantages over other types of robots because they can navigate complex and irregular terrain with relative ease. Their four-legged design provides stability and balance, and allows them to traverse obstacles such as stairs, rough terrain, and narrow passages. This makes them well suited to situations where wheeled or bipedal robots may struggle, such as disaster zones and other hazardous environments.

There are several different types of quadruped robots, each with its own unique design and capabilities. Some of the most common types include statically stable robots, dynamically stable robots, and robots that use a hybrid of both static and dynamic stability.

Area of Use

Currently, quadruped robots are used for a variety of tasks, such as search and rescue, military operations, and inspection and maintenance. They are also being developed for entertainment, for example in film and television production.

Quadruped Robots Design

One of the key factors that enables quadruped robots to move and perform tasks is their leg design. Quadruped robots typically have four legs, each with multiple joints that allow for a wide range of motion. The legs are controlled by actuators, which are devices that convert electrical signals into mechanical movement. The actuators in the legs of a quadruped robot are controlled by the onboard computer, which uses algorithms to calculate the appropriate movements for each joint.
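
As a rough illustration of how an onboard computer might calculate joint movements, the sketch below solves the inverse kinematics of a planar two-link leg: given a desired foot position, it returns a hip and a knee angle. The function names `leg_ik` and `leg_fk`, the 0.2 m link lengths, and the frame convention (hip at the origin, x forward, z up, angles measured from the x axis) are assumptions made for this example, not details of any particular robot.

```python
import math


def leg_fk(theta1, theta2, l1=0.2, l2=0.2):
    """Forward kinematics: joint angles (rad) -> foot position (x, z) in the hip frame."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    z = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, z


def leg_ik(x, z, l1=0.2, l2=0.2):
    """Inverse kinematics of a planar two-link leg (one of the two knee solutions)."""
    d2 = x * x + z * z
    # Law of cosines gives the knee angle from the hip-to-foot distance
    cos_knee = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_knee <= 1.0:
        raise ValueError("target is out of reach")
    theta2 = math.acos(cos_knee)
    # Hip angle: direction to the foot minus the offset caused by the bent knee
    theta1 = math.atan2(z, x) - math.atan2(l2 * math.sin(theta2),
                                           l1 + l2 * math.cos(theta2))
    return theta1, theta2


if __name__ == "__main__":
    hip, knee = leg_ik(0.05, -0.25)   # foot 25 cm below and 5 cm ahead of the hip
    print(hip, knee, leg_fk(hip, knee))
```

Feeding the result of `leg_ik` back into `leg_fk` should reproduce the requested foot position, which is a convenient sanity check. A real leg usually has a third (abduction) joint and joint limits, which this sketch ignores.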

Quadruped Gaits

Gaits are the specific patterns of leg movement that a quadruped robot uses to move from one place to another. They are important because they determine how the robot moves, and can affect its efficiency, stability, and speed. Some of the most common gait patterns used by quadruped robots include walking, running, and trotting. The choice of gait pattern depends on the specific task that the robot is performing and the conditions of the environment. This section examines the different types of quadruped gaits and how they are used by robots.

One of the most common gaits used by quadruped robots is the walking gait. This gait is used for slow and steady movement, and is characterized by the legs being lifted one at a time, so that in a slow walk three legs stay on the ground while the fourth swings forward. This allows the robot to maintain balance and stability as it moves, and is used for tasks that require stability and precision, such as carrying objects or navigating uneven terrain.

Another common gait used by quadruped robots is the running gait. This gait is used for faster movement and is characterized by short ground contacts separated by flight phases, in which all of the legs can be off the ground at once. This gait allows the robot to cover ground quickly, but sacrifices some stability and precision compared to the walking gait.

A third type of gait used by quadruped robots is the trotting gait. This gait is characterized by diagonal pairs of legs (for example, front-left and hind-right) moving together, so the robot alternates between its two diagonal supports. The trotting gait is faster than walking while maintaining some stability and precision, making it a good choice for tasks that require a combination of speed and stability.

In addition to these three common gaits, there are other gaits that quadruped robots can use. For example, some robots use a bounding gait, in which the two front legs move together and the two hind legs move together, so the robot alternates between its front pair and its hind pair. This gait is used for high-speed movement and for tasks such as running and jumping.

Here is an example of gait generation for quadruped robots using a simple tripod gait pattern:

    function gait = tripodGait(t, stance_duration, swing_duration, step_height)
    % Returns the foot height of each of the four legs at time t.
    % t: time
    % stance_duration: duration of the stance phase
    % swing_duration: duration of the swing phase
    % step_height: height of each step

    % Initialize gait (foot height of legs 1 to 4)
    gait = zeros(1, 4);

    % Position within the current gait cycle
    phase = mod(t, stance_duration + swing_duration);

    if phase < stance_duration
        % Stance phase: legs 1 to 3 on the ground, leg 4 lifted
        gait(1:3) = 0;
        gait(4) = step_height;
    else
        % Swing phase: legs 1 to 3 lifted, leg 4 on the ground
        gait(1:3) = step_height;
        gait(4) = 0;
    end

    end

In this example, the tripod gait consists of two phases: the stance phase and the swing phase. During the stance phase, legs 1 to 3 are on the ground while leg 4 is lifted to step_height; during the swing phase the roles are reversed. The gait vector holds the foot height of each leg, and the phase is chosen from the current time t and the specified durations of the stance and swing phases. Because the second phase leaves only one leg on the ground, this is a toy pattern rather than a gait a real robot could balance on.

This simple gait generation example is just the starting point for more advanced gait patterns and algorithms for quadruped robots. More sophisticated gait patterns can be developed to improve the stability, efficiency, and adaptability of quadruped robots in different environments and tasks.
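
One common way to describe such gait patterns, sketched below in Python, is to give every leg a phase offset within the gait cycle and a duty factor (the fraction of the cycle it spends on the ground). The leg order, the `GAITS` table, and the specific offset and duty values are illustrative assumptions, not measurements from a particular robot.

```python
# Leg order (an assumption): front-left, front-right, hind-left, hind-right.
LEGS = ("FL", "FR", "HL", "HR")

# Phase offset of each leg as a fraction of the gait cycle, plus the duty
# factor (fraction of the cycle a leg spends in stance). Illustrative values.
GAITS = {
    "walk":  {"offsets": (0.5, 0.0, 0.75, 0.25), "duty": 0.75},
    "trot":  {"offsets": (0.0, 0.5, 0.5, 0.0),   "duty": 0.5},
    "bound": {"offsets": (0.0, 0.0, 0.5, 0.5),   "duty": 0.5},
}


def legs_in_stance(gait_name, t, period=1.0):
    """Return the names of the legs that are on the ground at time t."""
    gait = GAITS[gait_name]
    stance = []
    for leg, offset in zip(LEGS, gait["offsets"]):
        phase = (t / period + offset) % 1.0   # position of this leg in its cycle
        if phase < gait["duty"]:              # the first part of the cycle is stance
            stance.append(leg)
    return stance


if __name__ == "__main__":
    for name in GAITS:
        print(name, [legs_in_stance(name, k / 8) for k in range(8)])
```

With these values the walk always keeps three legs on the ground, the trot alternates between the two diagonal pairs, and the bound alternates between the front pair and the hind pair.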

General Overview on Operation

In terms of operation and control, these robots are typically controlled by a combination of onboard computers and external control systems. The onboard computers use sensors and algorithms to control the robot's movements, while the external control system provides commands and feedback to the robot. Some quadruped robots are also equipped with cameras and other sensors, which can be used to gather information about their environment and make decisions about how to navigate it.

A simplified example of the working process for a quadruped robot is as follows. A central control box (an industrial PC or perhaps a Raspberry Pi) receives information from several hardware components.

One vital source of information is the motors, which provide feedback data such as torque and position.

Another vital source of information is the IMU (inertial measurement unit), whose gyroscope and accelerometer data are processed to estimate the robot's orientation in 3D space, that is, its roll, pitch, and yaw.
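
A minimal sketch of how roll and pitch might be estimated from IMU data is shown below. It assumes an accelerometer that reads roughly (0, 0, +g) when the robot stands level, and it uses a complementary filter, which is one simple fusion technique among several. The function names and the blending factor `alpha` are assumptions made for this example. Yaw cannot be recovered from the accelerometer alone and is left out.

```python
import math


def accel_to_roll_pitch(ax, ay, az):
    """Roll and pitch (rad) from an accelerometer reading taken while nearly static."""
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return roll, pitch


def complementary_filter(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Blend the integrated gyro rate (smooth but drifting) with the
    accelerometer angle (noisy but drift-free)."""
    return alpha * (angle + gyro_rate * dt) + (1.0 - alpha) * accel_angle


if __name__ == "__main__":
    roll_acc, pitch_acc = accel_to_roll_pitch(0.0, 1.7, 9.66)
    roll = 0.0
    for _ in range(200):   # 200 samples at 100 Hz with a silent gyroscope
        roll = complementary_filter(roll, 0.0, roll_acc, dt=0.01)
    print(roll_acc, roll)
```

A higher `alpha` trusts the gyroscope more and reacts more slowly to the accelerometer; the right value depends on the sensor noise and the sample rate.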

These two sources of dynamic information (which changes as the robot moves) are then combined with static, known information about the robot (such as its centre of gravity, mass, volume, and inertia) to compute estimates of the robot's stability, which is sufficient for basic control.
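
One simple stability estimate of this kind is the static stability test: the robot is considered statically stable if the ground projection of its centre of gravity lies inside the polygon formed by the feet that are on the ground. The sketch below assumes a convex polygon with vertices given in counter-clockwise order; the function name and the foot coordinates are hypothetical.

```python
def is_statically_stable(com_xy, feet_xy):
    """True if the ground projection of the centre of gravity lies inside the
    convex support polygon whose vertices (feet in stance) are listed in
    counter-clockwise order."""
    n = len(feet_xy)
    if n < 3:
        return False   # fewer than three contacts cannot form a support polygon
    for i in range(n):
        x1, y1 = feet_xy[i]
        x2, y2 = feet_xy[(i + 1) % n]
        # The sign of the cross product tells on which side of the edge the point lies
        cross = (x2 - x1) * (com_xy[1] - y1) - (y2 - y1) * (com_xy[0] - x1)
        if cross < 0:
            return False
    return True


if __name__ == "__main__":
    # Feet in metres, counter-clockwise: front-left, hind-left, hind-right, front-right
    four_feet = [(0.3, 0.2), (-0.3, 0.2), (-0.3, -0.2), (0.3, -0.2)]
    three_feet = four_feet[:3]   # front-right leg lifted
    print(is_statically_stable((0.0, 0.0), four_feet))
    print(is_statically_stable((0.2, -0.1), three_feet))
```

Lifting a leg shrinks the support polygon, which is why a slow walking gait shifts the body over the remaining three feet before each step. This test ignores momentum, so it only applies to slow, quasi-static motion.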

The computation often involves modeling the entire dynamic and static control behavior of the robot for stability when stationary as well as when moving. This is a highly complex and time-consuming process. A common approach to modeling a dynamic controller is to use a combination of rigid body dynamics, control theory, and machine learning techniques.

A simple example of a dynamic controller for a quadruped robot could be modeled as follows:

- Modelling the robot's dynamics: The dynamics of the robot are described using a set of mathematical equations that describe the motion of each body segment in terms of its position, velocity, and acceleration. These equations take into account the robot's mass, moment of inertia, and forces acting on it, such as gravity, friction, and actuator forces.

- Estimating the robot's state: The state of the robot is estimated using sensors such as accelerometers, gyroscopes, and encoders. These sensors provide information about the robot's orientation, velocity, and position.

- Designing the controller: The controller is designed using a combination of control algorithms, such as proportional-integral-derivative (PID) control, linear quadratic regulator (LQR), and model predictive control (MPC). These algorithms take into account the estimated state of the robot and its desired trajectory, and generate control signals to regulate the motion of the robot.

- Implementing the controller: The controller is implemented in software, either on the robot itself or on a separate computer, and its commands are sent to the robot over a network or directly to the actuators.

- Validating the controller: The controller is validated by performing experiments on the robot, either in simulation or on the real robot, and comparing the results to the desired behavior. The controller may be refined and re-designed based on the results of these experiments.
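
To make the controller design and validation steps above more concrete, here is a minimal sketch of a PID controller driving a single joint in a toy simulation. The `PID` class, the `simulate_joint` helper, the gains, and the plant model (a joint whose angle simply integrates the commanded velocity) are all assumptions chosen for brevity; a real leg joint has inertia, friction, and load that this ignores.

```python
class PID:
    """Textbook PID controller with output clamping (no anti-windup)."""

    def __init__(self, kp, ki, kd, output_limit):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.output_limit = output_limit
        self.integral = 0.0
        self.prev_error = None

    def update(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        # Skip the derivative on the first call, when there is no previous error
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        output = self.kp * error + self.ki * self.integral + self.kd * derivative
        # Clamp the command to the actuator limit
        return max(-self.output_limit, min(self.output_limit, output))


def simulate_joint(pid, target, steps=500, dt=0.01):
    """Toy plant: the joint angle integrates the commanded velocity."""
    angle = 0.0
    for _ in range(steps):
        command = pid.update(target, angle, dt)
        angle += command * dt
    return angle


if __name__ == "__main__":
    controller = PID(kp=2.0, ki=0.0, kd=0.0, output_limit=10.0)
    print(simulate_joint(controller, target=0.5))   # settles close to 0.5 rad
```

Running the simulation and comparing the final angle with the target is a small-scale version of the validation step: if the response is too slow or oscillates, the gains are adjusted and the experiment is repeated.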

A more recent and innovative method that bypasses explicit modelling of the dynamic behavior is reinforcement learning, in which the motor and IMU information is provided to a learning agent and stable behavior is acquired through reward functions. However, it is not a magic bullet: it requires significant tuning and processing power to generate valid movement.
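
As an illustration of what such a reward function might look like, the sketch below rewards tracking a target forward velocity and penalizes body tilt and motor effort. The function name, the individual terms, and the weights are assumptions made for this example; published reward functions typically contain many more terms and are tuned per robot.

```python
import math


def locomotion_reward(forward_velocity, target_velocity, roll, pitch, joint_torques,
                      w_vel=1.0, w_tilt=0.5, w_energy=0.001):
    """Scalar reward for one control step of a walking policy."""
    # Close to 1 when the robot moves at the target speed, decaying with the error
    velocity_term = math.exp(-(forward_velocity - target_velocity) ** 2)
    # Penalize leaning away from an upright body (angles in radians, from the IMU)
    tilt_penalty = roll ** 2 + pitch ** 2
    # Penalize large motor torques as a rough proxy for energy use
    energy_penalty = sum(t * t for t in joint_torques)
    return w_vel * velocity_term - w_tilt * tilt_penalty - w_energy * energy_penalty


if __name__ == "__main__":
    upright = locomotion_reward(0.5, 0.5, 0.0, 0.0, [0.0] * 12)
    tilted = locomotion_reward(0.5, 0.5, 0.3, 0.1, [2.0] * 12)
    print(upright, tilted)
```

Much of the tuning effort mentioned above goes into choosing these weights: if the energy penalty is too strong the robot may learn to stand still, and if it is too weak the motion can become jerky.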

Delving slightly deeper into control methods, we discuss:

- Model-Predictive Controls (MPC) for Quadrupeds

- Reinforcement Learning (RL) for Quadrupeds

MPC Quadrupeds

Model Predictive Control (MPC) is a control method that has been widely used in various fields, including robotics. In the context of quadrupedal robots, MPC can be used to control the motion of the robot in real time by predicting its future states and choosing actions that minimize a cost function.

In MPC, a mathematical model of the quadrupedal robot is used to predict its future states based on the current state and the control inputs. The control inputs are then chosen such that a cost function, which represents the objectives of the task, is minimized over a finite time horizon. The prediction and control optimization are repeated at a high frequency, typically many times per second, making MPC well suited for real-time control.

One of the advantages of MPC is that it can handle complex constraints, such as joint limits and torque limits, which are often present in quadrupedal robots. It also allows for the integration of various objectives, such as stability, efficiency, and comfort, into a single cost function, making it possible to balance trade-offs between these objectives.

However, the implementation of MPC for quadrupedal robots can be challenging, due to the high computational cost and the difficulty in obtaining accurate models of the robot's dynamics. Researchers have addressed these challenges by using techniques such as linearization, reduced-order models, and fast optimization algorithms.
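
The receding-horizon idea can be shown on a deliberately tiny problem. The sketch below controls a one-dimensional point mass rather than a quadruped, and it replaces the fast optimizers used in practice with a brute-force search over a small set of allowed forces, which also acts as the input constraint. The model, the cost weights, and the function names are assumptions made for illustration only.

```python
import itertools


def simulate(state, force, dt=0.1):
    """Prediction model: a unit point mass, state = (position, velocity)."""
    pos, vel = state
    vel = vel + force * dt
    pos = pos + vel * dt
    return pos, vel


def mpc_step(state, target, horizon=5, forces=(-1.0, 0.0, 1.0)):
    """Search all input sequences over the horizon and return the first
    input of the cheapest one (the rest of the sequence is discarded)."""
    best_cost, best_first = float("inf"), 0.0
    for sequence in itertools.product(forces, repeat=horizon):
        s, cost = state, 0.0
        for f in sequence:
            s = simulate(s, f)
            # Cost: position error, plus small penalties on velocity and effort
            cost += (s[0] - target) ** 2 + 0.1 * s[1] ** 2 + 0.01 * f ** 2
        if cost < best_cost:
            best_cost, best_first = cost, sequence[0]
    return best_first


if __name__ == "__main__":
    state = (0.0, 0.0)
    for _ in range(50):   # closed loop: re-plan at every step
        state = simulate(state, mpc_step(state, target=1.0))
    print(state)
```

The search space grows exponentially with the horizon, which is a small-scale view of the computational cost discussed above and the reason real controllers rely on linearized or reduced-order models and fast solvers.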

RL Quadrupeds

In reinforcement learning (RL), an agent learns a control policy by trial and error, guided by a reward signal. One of the challenges in using RL with quadrupedal robots is the high-dimensional state space, which makes it difficult for the agent to learn efficiently. Researchers have addressed this challenge by using techniques such as model-based RL, which uses a model of the environment to plan actions, and actor-critic algorithms, which separate the learning of the policy (what actions to take) from that of the value function (how good a state is).

Another challenge is that quadrupedal robots are complex and highly dynamic systems, which can make it difficult to learn robust and generalizable policies. To overcome this challenge, researchers have used methods such as curriculum learning, where the agent starts with simple tasks and gradually progresses to more complex ones, and transfer learning, where knowledge learned from one task is used to improve performance on another task.

Some of the most advanced controllers use hybrid approaches that combine model predictive control with deep learning models to control quadrupeds.

Quadruped Applications

Quadruped Waypoint Navigation

Waypoint navigation refers to the process of guiding a robot from its current location to a desired goal or set of goals, represented by a sequence of waypoints. In the context of quadrupedal robots, waypoint navigation is a common task that involves controlling the motion of the robot to follow a desired path, while avoiding obstacles and other constraints.

Waypoint navigation can be accomplished through various methods, including motion planning algorithms, which determine the optimal path based on a representation of the environment, and feedback control, which adjusts the robot's motion based on its current state and a desired trajectory.

One of the challenges in waypoint navigation for quadrupedal robots is dealing with the high dimensionality and complexity of their state space, which makes it difficult to find optimal paths and control the robot's motion in real-time. Academics have addressed this challenge by using techniques such as motion planning algorithms based on sampling and optimization, motion primitives, and trajectory optimization.

Another challenge is the ability to handle changes in the environment, such as moving obstacles, that may occur while the robot is navigating. Researchers have addressed this challenge by using reactive or adaptive control methods that allow the robot to update its path in real-time based on its current state and the changing environment.

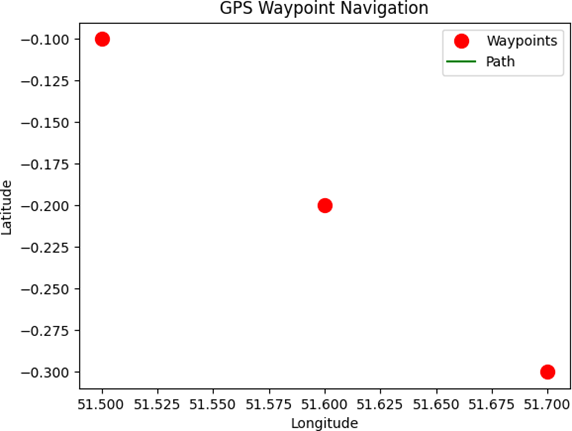

A naive example that can be tried out for GPS navigation is as follows:

    import numpy as np
    import matplotlib.pyplot as plt

    # Define the waypoints as a list of GPS coordinates [latitude, longitude]
    waypoints = np.array([[51.5, -0.1], [51.6, -0.2], [51.7, -0.3]])

    # Calculate the distance between consecutive waypoints (in degrees)
    distances = np.linalg.norm(np.diff(waypoints, axis=0), axis=1)

    # Define the starting position (a copy, so the waypoint array is not modified)
    current_position = waypoints[0].copy()

    # Define the speed (in km/h) and convert it to degrees per hour.
    # Naive assumption: one degree is about 111 km in every direction.
    speed_kmh = 50
    speed = speed_kmh / 111.0

    # Define the time step (in hours)
    dt = 0.01

    # Define the desired arrival time (in hours)
    arrival_time = 4

    # Define the tolerance for reaching a waypoint (in degrees)
    tolerance = 0.001

    # Initialize the time, the waypoint index, and the path
    time = 0.0
    waypoint_index = 0
    path = [current_position.copy()]

    # Start the navigation loop
    while time < arrival_time:
        # Calculate the offset and distance to the current waypoint
        offset = waypoints[waypoint_index] - current_position
        distance_to_waypoint = np.linalg.norm(offset)

        # Check if we have reached the current waypoint
        if distance_to_waypoint < tolerance:
            # Move to the next waypoint
            waypoint_index += 1
            # End the loop once the final waypoint has been reached
            if waypoint_index == waypoints.shape[0]:
                break
            continue

        # Calculate the unit direction to the current waypoint
        direction = offset / distance_to_waypoint

        # Move toward the waypoint without overshooting it
        step = min(speed * dt, distance_to_waypoint)
        current_position = current_position + step * direction

        # Update the time
        time += dt

        # Add a copy of the current position to the path
        path.append(current_position.copy())

    # Plot the waypoints and the final path (longitude on x, latitude on y)
    path = np.array(path)
    plt.plot(waypoints[:, 1], waypoints[:, 0], 'ro', markersize=10, label='Waypoints')
    plt.plot(path[:, 1], path[:, 0], '-g', label='Path')
    plt.legend(loc='best')

    # Add labels and title to the plot
    plt.xlabel('Longitude')
    plt.ylabel('Latitude')
    plt.title('GPS Waypoint Navigation')
    plt.show()

This code uses the numpy library to perform calculations and the matplotlib library to plot the waypoints and the final path. The while loop runs until the desired arrival time is reached or the final waypoint has been passed. At each iteration, the code calculates the distance to the current waypoint, switches to the next waypoint if the current one is within the tolerance, and updates the current position and time based on the velocity and the time step. The example is naive in that it treats latitude and longitude as flat planar coordinates.
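
The navigation example measures distances directly in degrees of latitude and longitude. When a distance in kilometres is needed, the haversine formula is a common choice for the great-circle distance between two GPS points. The sketch below assumes a spherical Earth with a mean radius of 6371 km; the function name is chosen for this example.

```python
import math

EARTH_RADIUS_KM = 6371.0   # mean Earth radius, spherical approximation


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


if __name__ == "__main__":
    # Distance between the first two waypoints of the navigation example
    print(haversine_km(51.5, -0.1, 51.6, -0.2))
```

At these latitudes a tenth of a degree of longitude is noticeably shorter than a tenth of a degree of latitude, which is why treating degrees as planar coordinates is only acceptable for a rough demonstration.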

Inspection task based on 2D or 3D LiDAR & FLIR Thermal Camera

Inspection tasks based on 2D or 3D LiDAR and FLIR thermal cameras can provide an effective and efficient solution for a wide range of industrial and commercial applications, such as building inspections, maintenance of infrastructure, and quality control.

LiDAR (Light Detection and Ranging) sensors use laser light to measure distances and create a point cloud representation of the environment, providing high-resolution 3D information about the environment. When combined with a thermal camera, such as a FLIR (Forward Looking Infrared) camera, the system can provide both detailed 3D information and thermal imaging, allowing for a comprehensive analysis of the environment.

In an inspection task, the LiDAR and thermal camera system can be mounted on a robotic platform, such as a drone or a ground-based robot, and be used to survey the environment and gather data about the objects and surfaces within it. This data can then be processed and analyzed to identify potential issues, such as structural damage or heat loss in a building, which can be used to prioritize maintenance and repair work.
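
A very reduced sketch of the analysis step is shown below: it flags pixels of a thermal image that exceed a temperature threshold and attaches the LiDAR range measured in the same direction. It assumes that the thermal image and the range image are already aligned pixel to pixel, which in practice requires calibration between the two sensors. The function name and the sample data are hypothetical.

```python
def find_hotspots(thermal_image, range_image, threshold_c):
    """Return (row, col, temperature_c, range_m) for every pixel hotter than
    threshold_c. Both images are lists of rows with identical shape."""
    hotspots = []
    for r, (temp_row, range_row) in enumerate(zip(thermal_image, range_image)):
        for c, (temp, dist) in enumerate(zip(temp_row, range_row)):
            if temp > threshold_c:
                hotspots.append((r, c, temp, dist))
    return hotspots


if __name__ == "__main__":
    thermal = [[21.0, 22.5, 21.8],
               [22.1, 78.4, 23.0]]   # degrees Celsius
    ranges = [[4.2, 4.1, 4.3],
              [3.9, 3.5, 4.0]]       # metres
    for row, col, temp, dist in find_hotspots(thermal, ranges, threshold_c=60.0):
        print(f"hotspot at pixel ({row}, {col}): {temp} C, {dist} m away")
```

A fixed threshold is the simplest possible rule; depending on the application, comparing each reading with its surroundings or with an earlier survey may be more informative.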

One of the advantages of using a LiDAR and thermal camera system for inspection tasks is the ability to cover large areas quickly and accurately, reducing the time and cost of manual inspection methods. Additionally, the use of robotics allows for safe and efficient access to difficult-to-reach areas, such as roofs or tall buildings, without the need for scaffolding or other access equipment.

In conclusion, the use of 2D or 3D LiDAR and FLIR thermal cameras in inspection tasks provides a powerful tool for analyzing the environment and identifying potential issues quickly and efficiently. With advances in this field, we can expect to see even more sophisticated and capable inspection systems in the future.

About the Author

Salman Sohail is a robotics engineer with strong organizational abilities and experience overseeing a multitude of industrial, research, and academic projects. He is experienced with cutting-edge development tools and procedures for robotic development, including software development, hardware integration, technical documentation, and press releases.
