reVISION simplifies FPGA-based vision system development

March 16, 2017

FPGA application design has never been easy, and the fact that devices keep growing bigger and more powerful does not help. More powerful devices make more demanding applications possible, and those applications in turn call for even more powerful devices: a vicious circle. The engineers who develop these increasingly complex applications do not have time to study in detail the hardware that makes it all possible. To help them, hardware manufacturers provide high-level tools, libraries, stacks, IP blocks and the like.

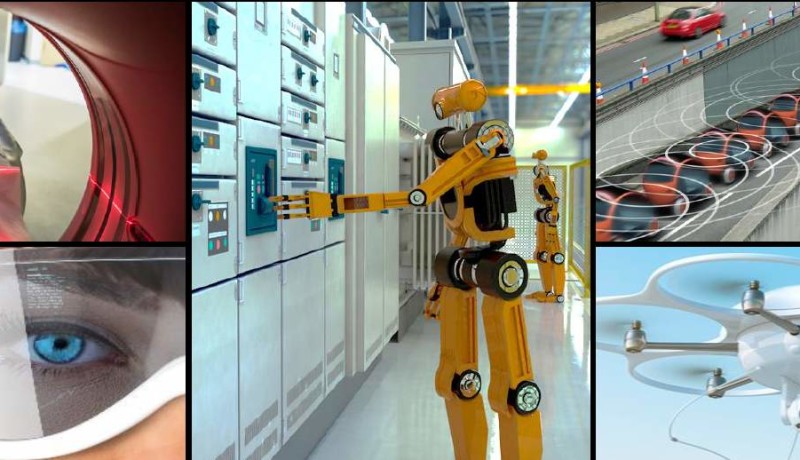

With this in mind, FPGA pioneer Xilinx has launched the reVISION stack for intelligent vision-guided systems. The new stack complements its Reconfigurable Acceleration Stack, which targets machine learning applications. Possible applications include collaborative robots or ‘cobots’, ‘sense and avoid’ drones, augmented reality, autonomous vehicles, automated surveillance and medical diagnostics.

The new stack lets systems engineers with little or no hardware design expertise develop complex applications using a C/C++/OpenCL development flow. It is also highly optimised, enabling more responsive vision systems. According to Xilinx, compared with competing embedded GPUs and typical SoCs such as the Nvidia Tegra, reVISION delivers up to 6x better images/second/watt in machine learning inference, 40x better frames/second/watt in computer vision processing, and one-fifth the latency.

Despite these efforts, FPGA application design is still not easy, but reVISION is a step in the right direction.