Software-Defined Vehicles: Sensor Fusion and EE Architecture Explained


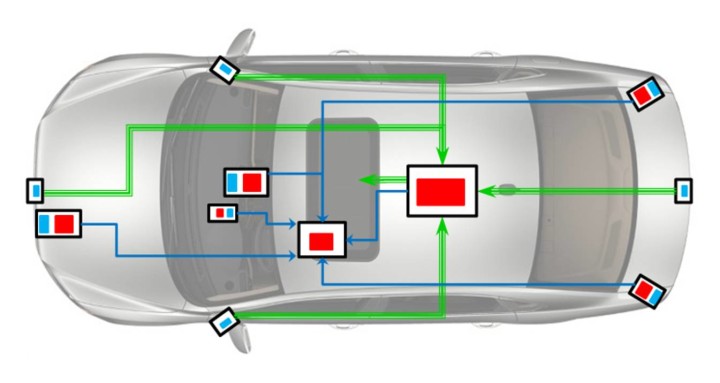

Modern vehicle electronics are shifting from isolated, single-function electronic control units (ECUs) toward software-defined vehicle architectures that share sensor data across the entire vehicle. The shift is driven by the volume and complexity of data from cameras, radar and LiDAR, and by the need for reliable perception in ADAS and autonomous driving features. The video below explains how sensor fusion works in practice and how electrical/electronic (EE) architectures are evolving to handle these demands.

Video: Software-Defined Vehicles with Sensor Fusion and EE Architecture

From Single-Function ECUs to Central Compute

Earlier systems dedicated one ECU to each function, and each ECU was typically connected to a simple sensor that produced little data. Modern vehicles employ high-resolution sensors whose output must feed multiple systems simultaneously, which requires greater bandwidth and more flexible communication networks.

What Drives the Need for Sensor Fusion

Each sensor type has strengths and weaknesses. Radar offers accurate distance measurement but poor angular resolution. Cameras provide rich visual detail but weaker depth information. LiDAR adds geometric precision at the cost of large data volumes. Fusion algorithms combine these strengths to improve detection accuracy, reduce noise and cut false alarms.
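One simple way to picture how complementary strengths combine is inverse-variance weighting, where each sensor's estimate counts in proportion to how much it can be trusted. The sketch below is only an illustration of that idea, not the method used in any particular vehicle: the `Measurement` type, the `fuse` function and all noise figures are hypothetical, and the two sensors are assumed to have independent errors.

```python
from dataclasses import dataclass


@dataclass
class Measurement:
    value: float     # measured quantity (metres for range, degrees for bearing)
    variance: float  # assumed noise variance, in the unit squared


def fuse(a: Measurement, b: Measurement) -> Measurement:
    """Inverse-variance weighted fusion of two independent estimates."""
    weight_a = 1.0 / a.variance
    weight_b = 1.0 / b.variance
    fused_variance = 1.0 / (weight_a + weight_b)
    fused_value = fused_variance * (weight_a * a.value + weight_b * b.value)
    return Measurement(fused_value, fused_variance)


# Hypothetical figures: radar is trusted for range, the camera for bearing.
radar_range = Measurement(value=50.0, variance=0.25)
camera_range = Measurement(value=53.0, variance=9.0)
radar_bearing = Measurement(value=4.0, variance=4.0)
camera_bearing = Measurement(value=2.0, variance=0.04)

fused_range = fuse(radar_range, camera_range)
fused_bearing = fuse(radar_bearing, camera_bearing)

print(f"range:   {fused_range.value:.2f} m (variance {fused_range.variance:.3f})")
print(f"bearing: {fused_bearing.value:.2f} deg (variance {fused_bearing.variance:.3f})")
```

With these assumed numbers the fused range stays close to the radar reading and the fused bearing stays close to the camera reading, while both fused variances are smaller than either input variance. Production systems generally use tracking filters and data association on top of this basic principle.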

Placement of Processing Power

Some pre-processing happens within sensors themselves, but as automation levels increase, more computation moves to domain controllers or central high-performance computing units. Where fusion occurs varies by design, balancing data volume, compute load and system timing.
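The trade-off between data volume and compute placement can be made concrete with back-of-the-envelope arithmetic. The sketch below compares the raw output of a single camera with an object list computed inside the sensor. Every number in it (resolution, bit depth, frame rate, object count, bytes per object) is a hypothetical placeholder chosen for illustration, not a figure from the video or from any specific sensor.

```python
def raw_camera_bits_per_second(width_px: int, height_px: int,
                               bits_per_pixel: int, frames_per_second: int) -> int:
    """Uncompressed video data rate for one camera."""
    return width_px * height_px * bits_per_pixel * frames_per_second


def object_list_bits_per_second(max_objects: int, bytes_per_object: int,
                                updates_per_second: int) -> int:
    """Data rate of an object list produced by in-sensor pre-processing."""
    return max_objects * bytes_per_object * 8 * updates_per_second


# Hypothetical camera: 1920 x 1080 pixels, 12 bits per pixel, 30 frames per second.
raw_rate = raw_camera_bits_per_second(1920, 1080, 12, 30)

# Hypothetical object list: up to 64 objects, 32 bytes each, 20 updates per second.
object_rate = object_list_bits_per_second(64, 32, 20)

print(f"raw camera stream: {raw_rate / 1e6:.1f} Mbit/s")
print(f"object list:       {object_rate / 1e6:.2f} Mbit/s")
```

Under these assumptions the raw stream is several orders of magnitude larger than the object list. Sending raw data to a central computer preserves detail for fusion but loads the network, while processing in the sensor saves bandwidth but discards information before fusion can use it.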

Data Output and Representation

Sensor fusion software can produce either object lists or lower-level grid/cell representations, depending on the needs of the driving function. Higher automation typically requires richer, lower-level representations that preserve more environmental detail.
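The difference between the two output styles can be sketched with a handful of detection points. In the example below, the same points are summarised once as an object list (one centroid per cluster) and once as a coarse occupancy grid. The `TrackedObject` type, the helper functions, the pre-assigned clusters and the grid dimensions are all hypothetical; real systems derive clusters and grids with far more elaborate methods.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    object_id: int
    x_m: float  # centroid position, metres
    y_m: float


def centroid(object_id, points):
    """Summarise a cluster of points as a single object."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return TrackedObject(object_id, sum(xs) / len(xs), sum(ys) / len(ys))


def build_grid(points, cell_size_m, width_cells, height_cells):
    """Mark every cell that contains at least one detection as occupied."""
    grid = [[0] * width_cells for _ in range(height_cells)]
    for x, y in points:
        col = int(x // cell_size_m)
        row = int(y // cell_size_m)
        if 0 <= row < height_cells and 0 <= col < width_cells:
            grid[row][col] = 1
    return grid


# Hypothetical detections, already grouped into two clusters.
cluster_a = [(1.2, 0.4), (1.4, 0.6), (1.6, 0.5)]
cluster_b = [(7.5, 3.1)]

# Object list: compact, one entry per cluster.
object_list = [centroid(1, cluster_a), centroid(2, cluster_b)]

# Grid representation: keeps where space is occupied, without deciding what it is.
grid = build_grid(cluster_a + cluster_b, cell_size_m=1.0, width_cells=10, height_cells=5)

for row in grid:
    print("".join("#" if cell else "." for cell in row))
```

The object list is small and easy for a driving function to consume, but it depends on the clustering being right. The grid makes fewer decisions up front, which is one reason lower-level representations suit higher automation levels.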

Safety and Software Assurance

Because these systems influence vehicle control, development follows automotive safety standards such as ISO 26262. Testing uses both simulation and real driving data to reveal edge cases that cannot be reliably produced artificially.

Why This Matters

Software-defined architectures allow higher-quality perception with fewer redundant sensors, and enable features to evolve through software updates. This flexibility is central to the ongoing development of ADAS and autonomous systems.
