Artificial or Artsificial? A Cheerful Look at Early AI Coverage in Elektor


It’s easy to forget that the roots of today’s hype-of-hypes, artificial intelligence (AI), can be traced back to early embedded computing, neural networks, and even fuzzy logic. Long before ChatGPT and deep learning dominated headlines, electronics enthusiasts were already exploring the frontiers of machine intelligence through practical projects and theoretical discussions. Here are examples from Elektor’s archives spanning nearly three decades of AI exploration, mainly “for smirk’s sake” but also to appreciate how prescient these early pioneers were.

The Foundation Years (1975-1981)

What Is Cybernetics? (1975)

The journey begins with one of Elektor’s earliest forays into intelligent systems. This foundational article introduced readers to cybernetics — the study of communication and control in machines and living beings. Even in 1975, the magazine was exploring how feedback loops and self-regulating systems could create more intelligent behavior in electronic devices.

The Elektor Intelekt Chess Computer (April 1981)

This article features the Intelekt chess computer, built around the Intel 8088 microprocessor running a ported version of Tiny Chess. The design emphasizes reasonable speed and “intelligence” levels, making it a strong opponent for many chess enthusiasts. Because the lab prototype of the Intelekt survived several relocations and massive cleanouts of the Elektor Lab, this famous item was revisited as part of the Retronics series (2004–2020). Decide for yourself whether the 8088-based chess player is intelligent, artificially intelligent, quasi-intelligent, or just following dumb von Neumann strategies coded in an EPROM.

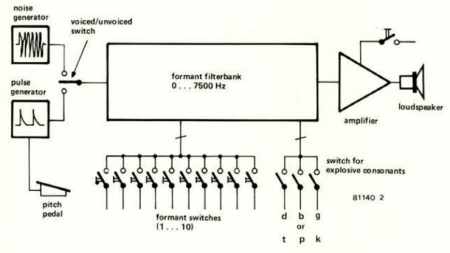

Talk to Computers (1981)

In the same era, Elektor was already tackling human-computer interaction with speech recognition and synthesis. This two-part series (Part 1 and Part 2) explored how computers could understand and respond to human speech — a challenge that would remain at the forefront of AI research for decades.

The Expansion Era (1986-1990)

The Future for Artificial Intelligence (1986)

As the 1980s progressed, Elektor began taking a more strategic view of AI’s potential. This article examined where artificial intelligence was heading and what breakthroughs might be possible in the coming years.

Speech Recognition System (1987)

Building on earlier work in human-computer interaction, this practical project showed readers how to build their own speech recognition system, bringing AI capabilities within reach of electronics enthusiasts.

Artificial Intelligence (May 1988)

In this comprehensive article, Mark Seymour discusses the potential for creating machines that could think independently, without needing to be programmed for each task. He outlines the historical context of AI, including early developments and the revival of neural computing, which aimed to replicate human brain functions, and suggests that while true AI had eluded researchers, the technology was evolving toward machines that could learn by example rather than through explicit programming.

The article then elaborates on neural computers that mimic the brain’s networks of neurons, highlighting advances in processing speed and problem-solving capability. It also touches on the challenges of constructing large neural networks and on applying these technologies to complex tasks such as speech recognition and pattern processing. In retrospect, the article was remarkably forward-looking, covering not only the practicalities of building a neural network but also how neural computing might improve AI.

Simulating Sight in Robots (May 1988)

In the same May 1988 edition, Arthur Fryatt published “Simulating Sight in Robots,” which today would easily fit under “edge computing.” More a news item than a practical guide, the article described a vision-sensing system that provided color-based quality control for grading fruit and vegetables in the Autoselector, a joint development of the Essex Electronics Centre (a department of the University of Essex) and Loctronic Graders. Their collaboration first produced the Autoselector A, which used a monochrome television imaging technique to detect differences in gray level. The subsequent Autoselector C achieved a significant advance with color imaging, which enabled up to 4,096 colors and shades to be identified in areas as small as 3 mm in diameter at very high speed. Because the entire surface of each product must be scanned, Loctronic Graders developed the Thrudeck, which presents constantly revolving products such as tomatoes, onions, kiwis, or citrus fruits to the camera at up to 2,500 per minute. Even though the products are irregularly shaped, the system can track, size, and count each one as it follows a meandering path down the deck.

Intelligence, Intentionality, and Self Awareness (1989)

This philosophical exploration delved deeper into what it truly means for a machine to be intelligent. The article examined concepts of intentionality and self-awareness — questions that remain central to AI debates today.

Computers Learn from Human Mistakes (1990)

As the decade closed, this article explored how computers could improve their performance by learning from errors — a concept that would later become fundamental to machine learning algorithms.

The Neural Network Renaissance (2001-2003)

Neural Networks in Control (2001)

As the new millennium began, neural networks experienced renewed interest. This article examined their application in control systems, showing how biologically inspired computing could solve complex engineering problems.

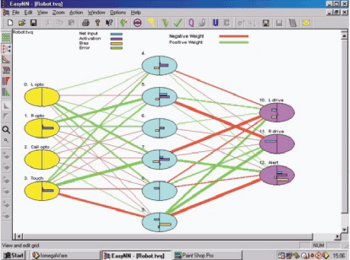

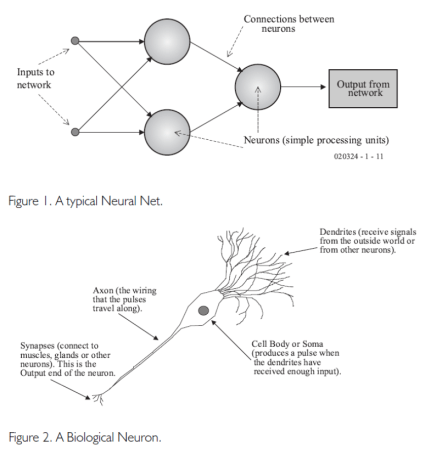

Practical Neural Networks Series (2003)

This comprehensive series by Chris McLeod and Grant Maxwell, graduates of Robert Gordon University Aberdeen, brought neural networks within reach of amateur enthusiasts. The series was published in four parts:

- An Introduction to Neural Nets

- Back Propagation Neural Nets

- Feedback Nets and Competitive Nets

- Applications and Large Neural Nets

“Artificial neural networks (neural nets or just ANNs for short),” they kicked off, “are a popular form of artificial intelligence (AI). They are based on the operation of brain cells, and many researchers think that they are our best hope for achieving true intelligence in a machine. If you’re a fan of the TV Series Star Trek, you’ll know that Data is supposed to have a neural net brain, as is the robot in the Terminator films. Although these technological wonders are on the cutting edge of research in computer science, they are also well within the grasp of the enthusiastic amateur. The aim of these articles is to introduce this fascinating topic in a practical way, which will allow you to experiment with your own neural nets.”

The authors concluded in Part 4 that “The neural net can be considered as a universal logic system, capable (providing that the network has three layers) of learning to produce any truth table you like. If the output of the neurons is a sigmoid function, then it acts rather like fuzzy logic, and is able to produce analog outputs — which can be useful for handling problems, in the real world, which are not black and white.”
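The authors’ claim can be illustrated with XOR, a truth table that no single-layer net can represent but a three-layer net (input, hidden, output) can learn. The following is a minimal Python sketch, not code from the series: the function names `train_truth_table` and `learn`, the four hidden neurons, the learning rate, the epoch count, and the random-restart strategy are all our own illustrative assumptions. It uses sigmoid neurons trained by back propagation, so the outputs are analog values between 0 and 1 rather than hard logic levels.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Logistic activation: squashes any input into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def train_truth_table(table, hidden=4, rate=0.5, epochs=10000, seed=0):
    """Train a 3-layer (input, hidden, output) net with back propagation.

    `table` is a list of (inputs, target) pairs with targets 0 or 1.
    Returns a function mapping inputs to an analog output in (0, 1).
    Layer sizes and hyperparameters are illustrative assumptions.
    """
    rng = random.Random(seed)
    n_in = len(table[0][0])
    # w_h[j]: weights from every input (plus a bias) to hidden neuron j.
    w_h = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(hidden)]
    # w_o: weights from every hidden neuron (plus a bias) to the output neuron.
    w_o = [rng.uniform(-1, 1) for _ in range(hidden + 1)]

    def forward(x):
        xb = list(x) + [1.0]  # constant 1 acts as the bias input
        h = [sigmoid(sum(w * v for w, v in zip(row, xb))) for row in w_h]
        hb = h + [1.0]
        y = sigmoid(sum(w * v for w, v in zip(w_o, hb)))
        return xb, hb, y

    for _ in range(epochs):
        for x, target in table:
            xb, hb, y = forward(x)
            # Output error term: error times the sigmoid derivative y * (1 - y).
            d_out = (target - y) * y * (1 - y)
            # Hidden error terms: propagate d_out back through the old output weights.
            d_hid = [d_out * w_o[j] * hb[j] * (1 - hb[j]) for j in range(hidden)]
            for j in range(hidden + 1):
                w_o[j] += rate * d_out * hb[j]
            for j in range(hidden):
                for i in range(n_in + 1):
                    w_h[j][i] += rate * d_hid[j] * xb[i]

    return lambda x: forward(x)[2]


def learn(table, attempts=10):
    """Retry with fresh random weights if training lands in a poor local minimum."""
    for seed in range(attempts):
        net = train_truth_table(table, seed=seed)
        if all(abs(net(x) - t) < 0.25 for x, t in table):
            return net
    raise RuntimeError("training did not converge")


# Usage: learn the XOR truth table and print the analog outputs.
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
net = learn(XOR)
for x, t in XOR:
    print(x, "target", t, "output", round(net(x), 3))
```

Rounding the outputs recovers the truth table, while the unrounded values show the “analog,” fuzzy-logic-like behavior the authors describe. The restart loop is a pragmatic choice: back propagation from random weights occasionally stalls in a local minimum, and simply retrying with new weights is the easiest remedy in a toy example like this.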

Notably, the series had a course support website hosted by Robert Gordon University, though that website has since vanished from the net.

Looking Back, Looking Forward

What’s remarkable about this collection of articles spanning nearly three decades is how many of the core concepts remain relevant today. From cybernetics and feedback systems to neural networks and machine learning from human mistakes, Elektor’s contributors were exploring ideas that would eventually become the foundation of modern AI.

The question raised by the chess computer, whether following programmed strategies constitutes “intelligence,” echoes in today’s debates about large language models. The vision systems for fruit sorting presaged today’s computer vision applications. The neural network series anticipated the deep learning revolution that would transform AI decades later.

Perhaps most importantly, these articles maintained a practical, hands-on approach that encouraged readers to experiment and build their own intelligent systems. In an age where AI can seem mysterious and inaccessible, Elektor’s legacy reminds us that understanding comes through doing — and that the path from “artsificial” to artificial intelligence is paved with curiosity, experimentation, and the occasional smirk at our early attempts to create thinking machines.

The question remains: were these early systems truly intelligent, or just very clever programming? That we are still asking the same question today suggests that the journey toward artificial intelligence has perhaps always been more about asking the right questions than finding definitive answers.

Editor's Note: This article (250736-01) appears in the Edge Impulse Guest-Edited edition of Elektor, which will be published in December 2025.
