We’re Not Innovating, We’re Just Forgetting Slower

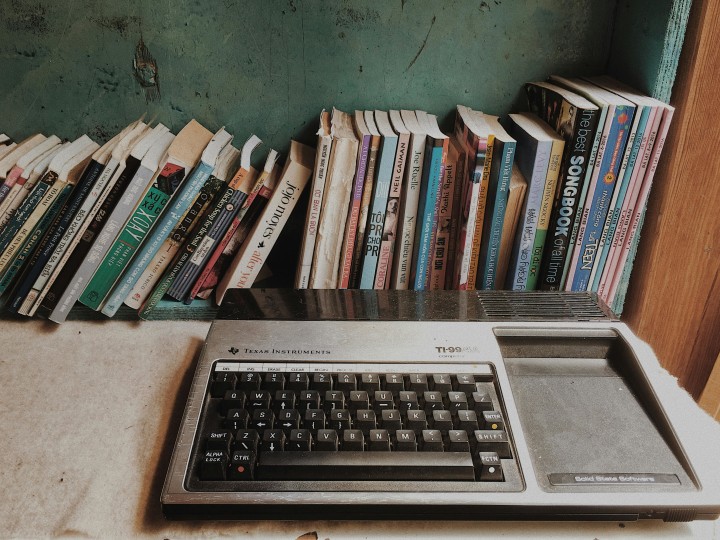

My Texas Instruments TI-99/4A Home Computer still boots. Forty-one years after I first plugged it into our family’s wood-grain TV, it fires up in less than two seconds, ready to accept commands in TI BASIC. No updates required. No cloud connectivity. No subscription fees. No ads. Just pure, deterministic computing that does exactly what you tell it to do, every single time.

Meanwhile, my Google Nest Wi-Fi router can no longer establish a PPPoE connection through my fibre modem after a “helpful” update from my ISP. My PCs can still establish one, though, so I’ve wired the modem straight into a PC. Which means no Google Nest Wi-Fi. Which means all those Wi-Fi lightbulbs in my home? Not working right now. My fault, I know.

This isn't nostalgia talking — it's a recognition that we’ve traded reliability and understanding for the illusion of progress. Today’s innovation cycle has become a kind of collective amnesia, where every few years we rediscover fundamental concepts, slap a new acronym on them, and pretend we’ve revolutionized computing. Edge computing? That’s just distributed processing with better marketing. Microservices? Welcome to the return of modular programming, now with 300% more YAML configuration files. Serverless? Congratulations, you’ve rediscovered time-sharing, except now you pay by the millisecond.

The Longevity Test

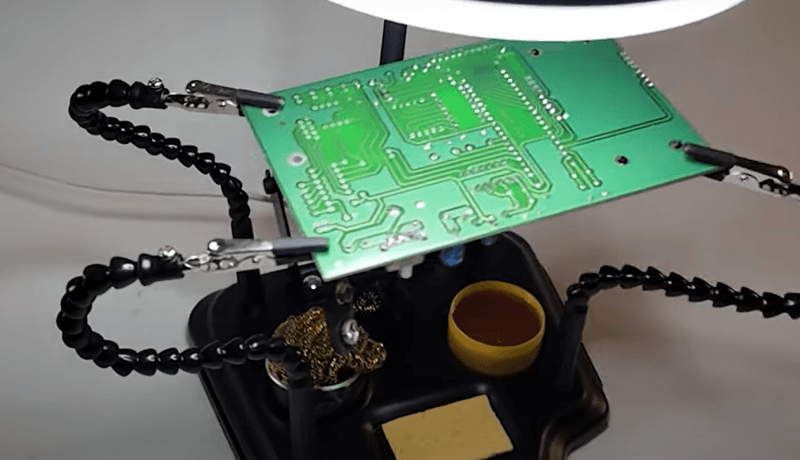

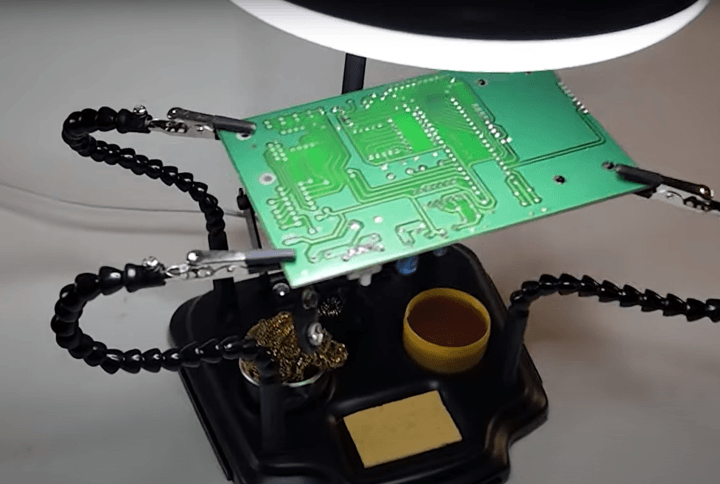

There’s something deeply humbling about opening a 40-year-old piece of electronics and finding components you can actually identify. Resistors, capacitors, integrated circuits with part numbers you can look up. Compare that to today’s black-box system-on-chip designs, where a single failure means the entire device becomes e-waste. We’ve optimized for manufacturing efficiency and planned obsolescence while abandoning the radical idea that users might want to understand — or, heaven forbid, repair — their tools.

The VHS player in my basement could be fixed with a screwdriver and a service manual (OK, sometimes an oscilloscope). Meanwhile, my Wi-Fi router requires a PhD in reverse engineering just to figure out why it won’t connect to the internet. (Google is less than helpful, offering a dumbed-down user interface that tells you little more than “something went wrong.”) We’ve mistaken complexity for sophistication and abstraction for advancement.

This isn't just about hardware. Software has followed the same trajectory, piling abstraction upon abstraction until we’ve created a tower of dependencies so precarious that updating a single package can break an entire application. Modern developers debug through seventeen layers of frameworks to discover that their problem is a missing semicolon in a configuration file generated by a tool that abstracts away another tool that was created to simplify a process that was perfectly straightforward twenty years ago.

The AI Hype Machine

Nothing illustrates our forgetting problem quite like the current AI discourse. Large language models are impressive statistical text predictors: genuinely useful tools that excel at pattern matching and interpolation. But listen to the breathless coverage from tech journos who couldn’t explain the difference between RAM and storage if their click-through rates depended on it, and you’d think we’d achieved sentience in a server rack.

The same publications that use “disruptive AI” unironically are the ones that need to Google “what is a neural network” every time they write about machine learning. They've turned every statistical improvement into a breathless announcement about the future of humanity, completely missing the fact that we’re applying decades-old mathematical concepts with more compute power. It’s less magic and more linear algebra at scale.

This wouldn’t matter if it were just marketing hyperbole, but the misunderstanding has real consequences. Companies are making billion-dollar bets on technologies they don’t understand, while actual researchers struggle to separate legitimate progress from venture capital fever dreams. We’re drowning in noise generated by people who mistake familiarity with terminology for comprehension of the underlying principles.

Maker Culture vs. Making Things

The “maker movement” has become another victim of our forgetting problem. What started as a legitimate return to hands-on engineering has been co-opted by influencer culture, where the goal isn’t to build something useful but to generate content about building something photogenic. Scroll through maker TikTok and you'll find endless videos of people hot-gluing LEDs to fruit, calling it “innovative hardware hacking” while completely missing the actual engineering happening in labs and workshops around the world.

Real making is messy. It involves understanding material properties, thermal characteristics, failure modes, and the thousand small decisions that separate a working prototype from an expensive paperweight. It requires reading datasheets, calculating tolerances, and debugging problems that don’t have Stack Overflow answers. None of this photographs well or fits into a sixty-second video format.

The shallow version of maker culture treats engineering like a lifestyle brand — all the aesthetic appeal of technical competence without the years of study required to develop it. It’s cosplay for people who want to look innovative without doing the unglamorous work of actually understanding how things function.

The Knowledge Drain

We’re creating a generation of developers and engineers who can use tools brilliantly but can't explain how those tools work. They can deploy applications to Kubernetes clusters but couldn’t design a simple op-amp circuit. They can train neural networks but struggle with basic statistics. They can build responsive web applications but have never touched assembly language or understood what happens between pressing Enter and seeing output on screen.

This isn't their fault — it's a systemic problem. Our education and industry reward breadth over depth, familiarity over fluency. We’ve optimized for shipping features quickly rather than understanding systems thoroughly. The result is a kind of technical learned helplessness, where practitioners become dependent on abstractions they can’t peer beneath.

When those abstractions inevitably break (and they always do), we’re left debugging systems we never really understood in the first place. The error messages, if we’re lucky enough to get any, might as well be written in hieroglyphics, because we’ve lost the contextual knowledge needed to interpret them.

What We Need Now

We need editors who know what a Bode plot is. We need technical writing that assumes intelligence rather than ignorance. We need educational systems that teach principles alongside tools, theory alongside practice. Most importantly, we need to stop mistaking novelty for innovation and complexity for progress.

The best engineering solutions are often elegantly simple. They work reliably, fail predictably, and can be understood by the people who use them. They don't require constant updates or cloud connectivity or subscription services. They just work, year after year, doing exactly what they were designed to do.

That TI-99/4A still boots because it was designed by people who understood every component, every circuit, every line of code. It works because it was built to work, not to generate quarterly revenue or collect user data or enable some elaborate software-as-a-service business model.

We used to build things that lasted. We can do it again, but first we need to stop forgetting what we already know.

The author spent fifteen minutes trying to get his smart doorbell to connect to Wi-Fi while writing this. The irony was not lost on him.

Discussion (4 comments)

Joseph Davis 1 month ago

One problem is that 'decision makers' all too often think they know better. The business guru who took over my IT department after the engineers all retired had no idea how little he knew, because business schools, in general, can't teach anything real, just techniques for quantifying productivity in arbitrary ways.

This was made crystal clear to me when he demanded that I modify my database code for tracking user accounts to prevent users from having multiple accounts: an ongoing nuisance, not a serious problem, but a politically loud one. His solution, which he knew was better than anything my CS degree could offer, was to embed usernames into the records of the people being tracked. I suppose that, given his spreadsheet training from business school, it seemed a brilliant idea, and he most certainly considered himself brilliant; nobody dared question him. Of course, an n-dimensional relational database is not a spreadsheet, but to him, I guess, a database is a database, two-dimensional or not. He never forgave me for not hailing his brilliance, especially when I simply modified the account-management interfaces to disallow multiple accounts, except, of course, for system administrators who also had root and such. His solution would have made root untrackable...

People don't rise in political influence, in business or in governance, on their technical skills, but on their personality, their character, and their skill at selling themselves. The unwise don't check whether the 'leader' is properly skilled; they just love the slogans, or the winning personality. If you point out one of the leader's failings, you are the complainer, and people ignore you. Good managers and politicians don't decide what to do themselves; they use their people skills to coordinate their team of technical people, who find the solutions that can then be implemented. That's hard to find in a culture of pride on steroids...

The only solution I see is an educated populace, and here in the USA, modern college degrees are probably not even as good as a high school diploma from 50 years ago, in the sense of understanding logic, rhetoric, and critical thinking. Since politicians win votes with blind popular slogans, and critical thinking is their enemy, they have no motivation to actually improve the educational system.

I say all this because I agree with Brian's points; further, such principles apply in every sphere: material, spiritual, political, and personal. From science to religion, using it to support your preconceptions and views is the bad version; using it to challenge yourself and evolve towards reality is the good version.

Content Director, Elektor 3 weeks ago

Dirk Haratz 1 week ago

Brian Tristam Williams 1 week ago